Miroslav Bachinski

(former spelling: Myroslav Bachynskyi)

I'm an Associate Professor in the field of Human-Computer Interaction (HCI) and the leader of the HCI research group at the Department of Information Science and Media Studies at the University of Bergen. Before coming to Bergen, I was a postdoctoral researcher at the University of Bayreuth and at the University of Aarhus in the team of Prof. Jörg Müller, and a postgraduate research visitor at the University of Glasgow in the team of Prof. Roderick Murray-Smith.

I was awarded a PhD by the Department of Computer Science at the University of Saarland, the Cluster of Excellence "Multimodal Computing and Interaction", and the Max Planck Institute for Informatics for the thesis "Biomechanical models for Human-Computer Interaction", supervised by Prof. Dr. Antti Oulasvirta and Prof. Dr. Jürgen Steimle.

My research focuses on the development and application of data-driven methods to diverse research and design problems, including the improvement of post-desktop user interfaces with a large space of alternative designs (e.g., Virtual Reality, levitation interfaces), the development of wearable sensor systems, the development of digital biomarkers for the early diagnosis of dementia, and the development of assistive technologies. Besides standard HCI methods, I use machine learning and artificial intelligence, optical motion capture, movement dynamics modeling, and biomechanical modeling and simulation to formalize the design space in mathematical models and find optimal designs.

Research Projects and Publications

Peer-reviewed papers

What simulation can do for HCI research

R. Murray-Smith, A. Oulasvirta, A. Howes, J. Müller, A. Ikkala, M. Bachinski, A. Fleig, F. Fischer, M. Klar

Interactions 2022

Insights:

- Simulation-based methods are developing rapidly, changing theory and practice in applied fields.

- Simulations help create and validate new HCI theory, making design and engineering more predictable, and improving safety and accessibility.

- Emulation of user behaviour with generative models tests our understanding of an interactive system.

- Simulation-based intelligence can be created by directly embedding models in intelligent interactive systems.

- Model-based evaluation provides insights into usability before user-testing, saving money, time, and discomfort.

[pdf]

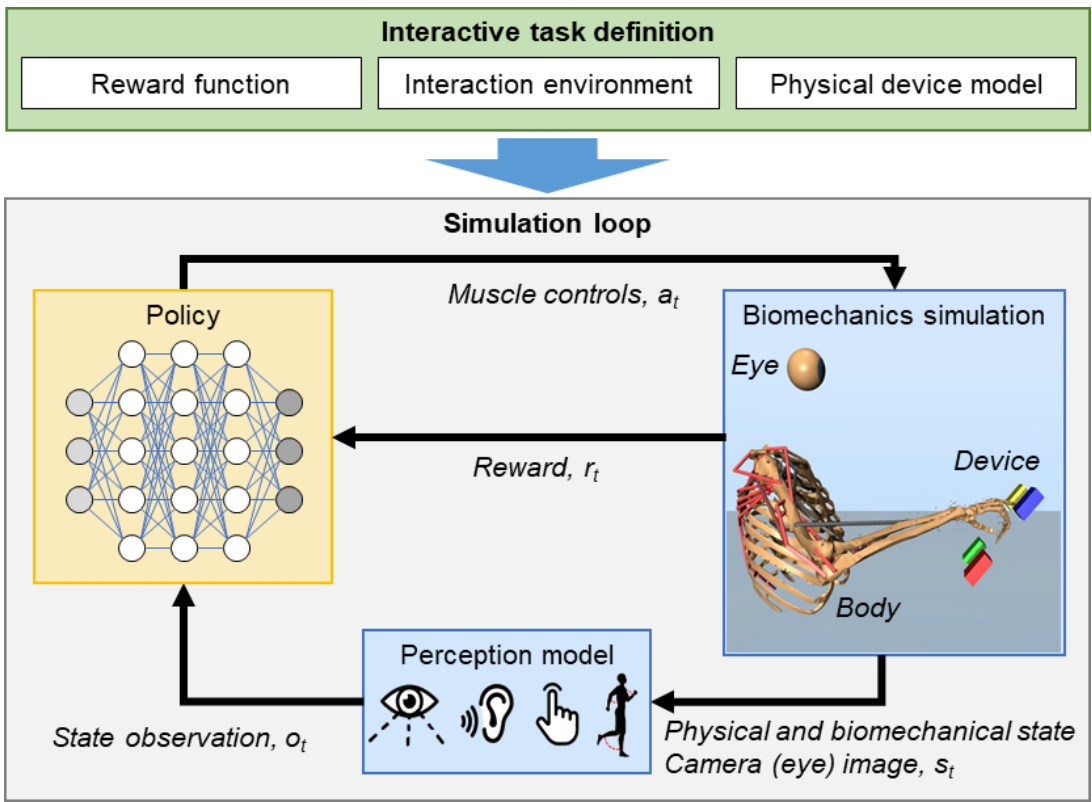

Breathing life into biomechanical user models

A. Ikkala, F. Fischer, M. Klar, M. Bachinski, A. Fleig, A. Howes, P. Hämäläinen, J. Müller, R. Murray-Smith, A. Oulasvirta

ACM UIST 2022

Forward biomechanical simulation in HCI holds great promise as a tool for evaluation, design, and engineering of user interfaces. Although reinforcement learning (RL) has been used to simulate biomechanics in interaction, prior work has relied on unrealistic assumptions about the control problem involved, which limits the plausibility of emerging policies. These assumptions include direct torque actuation as opposed to muscle-based control; direct, privileged access to the external environment, instead of imperfect sensory observations; and lack of interaction with physical input devices. In this paper, we present a new approach for learning muscle-actuated control policies based on perceptual feedback in interaction tasks with physical input devices. This allows modelling of more realistic interaction tasks with cognitively plausible visuomotor control. We show that our simulated user model successfully learns a variety of tasks representing different interaction methods, and that the model exhibits characteristic movement regularities observed in studies of pointing. We provide an open-source implementation which can be extended with further biomechanical models, perception models, and interactive environments.

[pdf]
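
To illustrate what muscle-based control adds over direct torque actuation, here is a minimal sketch of generic first-order muscle activation dynamics: the policy's excitation reaches the muscle only through a low-pass filter that rises faster than it decays. The time constants TAU_ACT and TAU_DEACT and the function names are illustrative assumptions, not taken from the paper or its open-source implementation.

```python
import numpy as np

# Assumed, illustrative time constants in seconds (not from the paper).
TAU_ACT = 0.01    # activation (rise) time constant
TAU_DEACT = 0.04  # deactivation (decay) time constant

def activation_step(a, u, dt):
    """One Euler step of first-order muscle activation dynamics.

    a: current activations in [0, 1]; u: neural excitations (controls).
    Activation rises with TAU_ACT and falls with the slower TAU_DEACT.
    """
    u = np.clip(u, 0.0, 1.0)
    tau = np.where(u > a, TAU_ACT, TAU_DEACT)
    return np.clip(a + dt * (u - a) / tau, 0.0, 1.0)

def simulate(excitation, dt=0.002):
    """Filter an excitation sequence of shape (steps, muscles) into activations."""
    excitation = np.asarray(excitation, dtype=float)
    a = np.zeros(excitation.shape[1])
    out = np.empty_like(excitation)
    for k, u in enumerate(excitation):
        a = activation_step(a, u, dt)
        out[k] = a
    return out
```

Under a constant full excitation the activation saturates within a few tens of milliseconds; switching the excitation off lets it decay more slowly.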

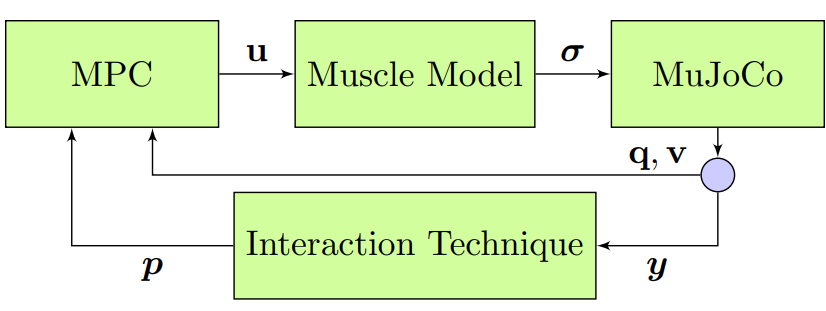

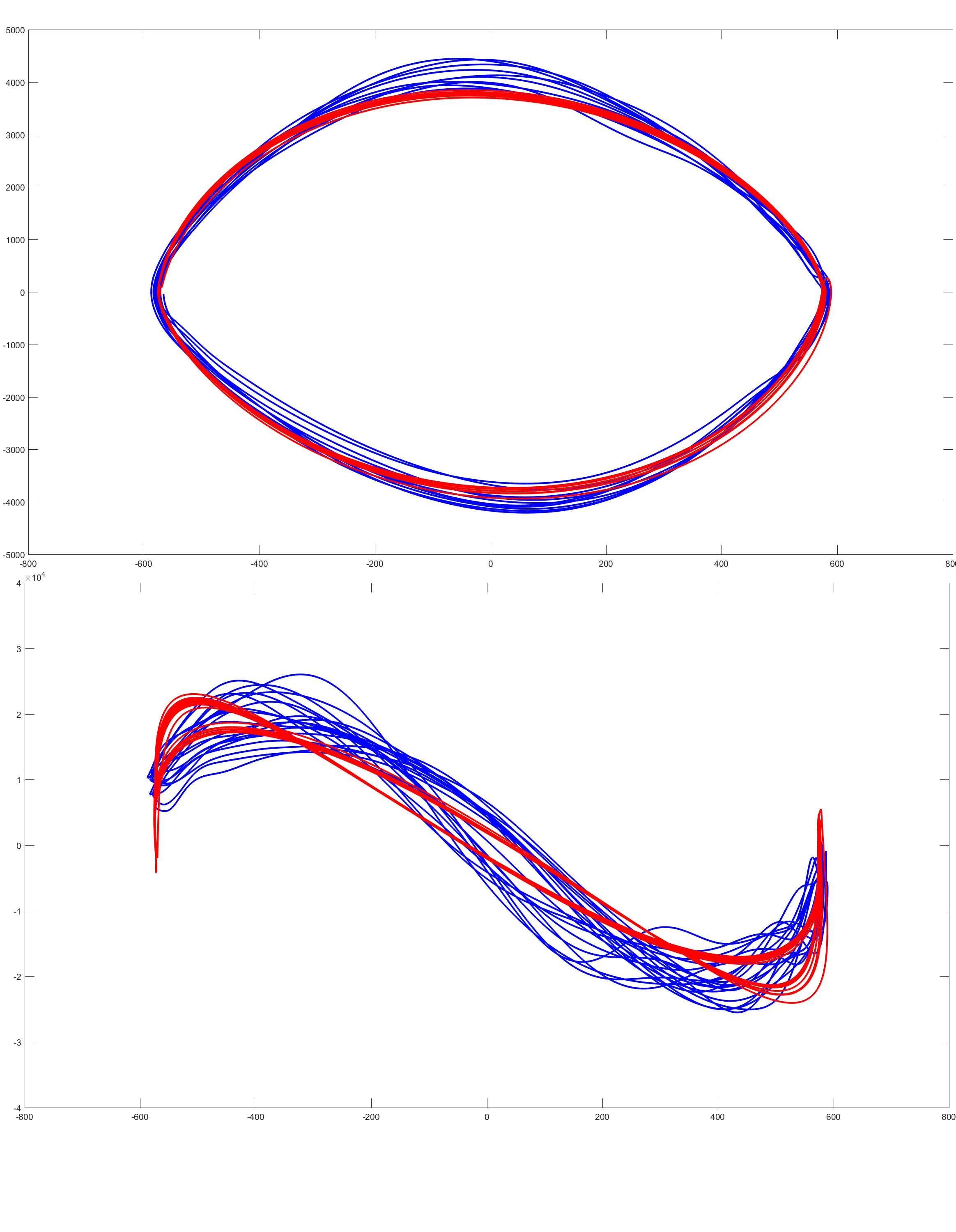

Simulating Mid-Air Interaction Trajectories via Model Predictive Control

M. Klar, F. Fischer, A. Fleig, M. Bachinski, J. Müller

MTNS 2022

We investigate the ability of Model Predictive Control (MPC) to generate human-like movements during interaction with mid-air user interfaces, i.e., pointing in virtual reality, using a state-of-the-art biomechanical model. This model-based approach enables the analysis of interaction techniques, e.g., in terms of ergonomics and effort, without the need for extensive user studies. The model is partly a black box implemented in the MuJoCo physics engine, requiring either gradient-free optimization algorithms or a computationally expensive gradient approximation. This makes it even more important to choose optimization parameters such as the objective function or the MPC horizon length wisely. In this work, we compare various objective functions in terms of how well the simulated trajectories match real ones obtained from motion capture. For the most promising objective function, we then analyze the effects of the horizon length and of the cost weights.

[pdf]
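
The receding-horizon idea behind MPC can be sketched on a much simpler plant. The sketch below assumes a 1D point mass instead of the MuJoCo biomechanical model, a quadratic distance-plus-effort objective, and the cross-entropy method as one example of a gradient-free optimizer. DT, HORIZON, U_MAX, effort_weight and all function names are illustrative assumptions, not the objectives or settings studied in the paper.

```python
import numpy as np

DT = 0.05      # control interval in seconds (assumed)
HORIZON = 15   # MPC horizon length in steps (assumed)
U_MAX = 5.0    # actuator limit (assumed)

def rollout_cost(x, v, controls, target, effort_weight):
    """Simulate a 1D point mass under a control sequence and return its cost."""
    cost = 0.0
    for u in controls:
        v += u * DT
        x += v * DT
        cost += (x - target) ** 2 + effort_weight * u ** 2
    return cost + v ** 2  # terminal penalty on residual velocity

def plan(x, v, target, mean, rng, effort_weight=1e-3,
         samples=100, elites=10, iters=5):
    """Cross-entropy method: gradient-free search over control sequences."""
    std = np.full(HORIZON, U_MAX / 2)
    for _ in range(iters):
        noise = rng.standard_normal((samples, HORIZON))
        candidates = np.clip(mean + std * noise, -U_MAX, U_MAX)
        costs = [rollout_cost(x, v, c, target, effort_weight) for c in candidates]
        best = candidates[np.argsort(costs)[:elites]]
        mean, std = best.mean(axis=0), best.std(axis=0) + 1e-3
    return mean

def run_mpc(target=1.0, steps=60, seed=0):
    """Receding-horizon loop: re-plan every step, apply only the first control."""
    rng = np.random.default_rng(seed)
    x, v = 0.0, 0.0
    mean = np.zeros(HORIZON)
    trajectory = [x]
    for _ in range(steps):
        mean = plan(x, v, target, mean, rng)
        u = mean[0]
        v += u * DT
        x += v * DT
        trajectory.append(x)
        mean = np.append(mean[1:], 0.0)  # shift the plan to warm-start the next step
    return np.array(trajectory)
```

In this toy setting, a longer HORIZON lets the planner anticipate deceleration earlier at a higher cost per step, and effort_weight trades target tracking against control effort, loosely mirroring the horizon length and cost weights analyzed in the paper.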

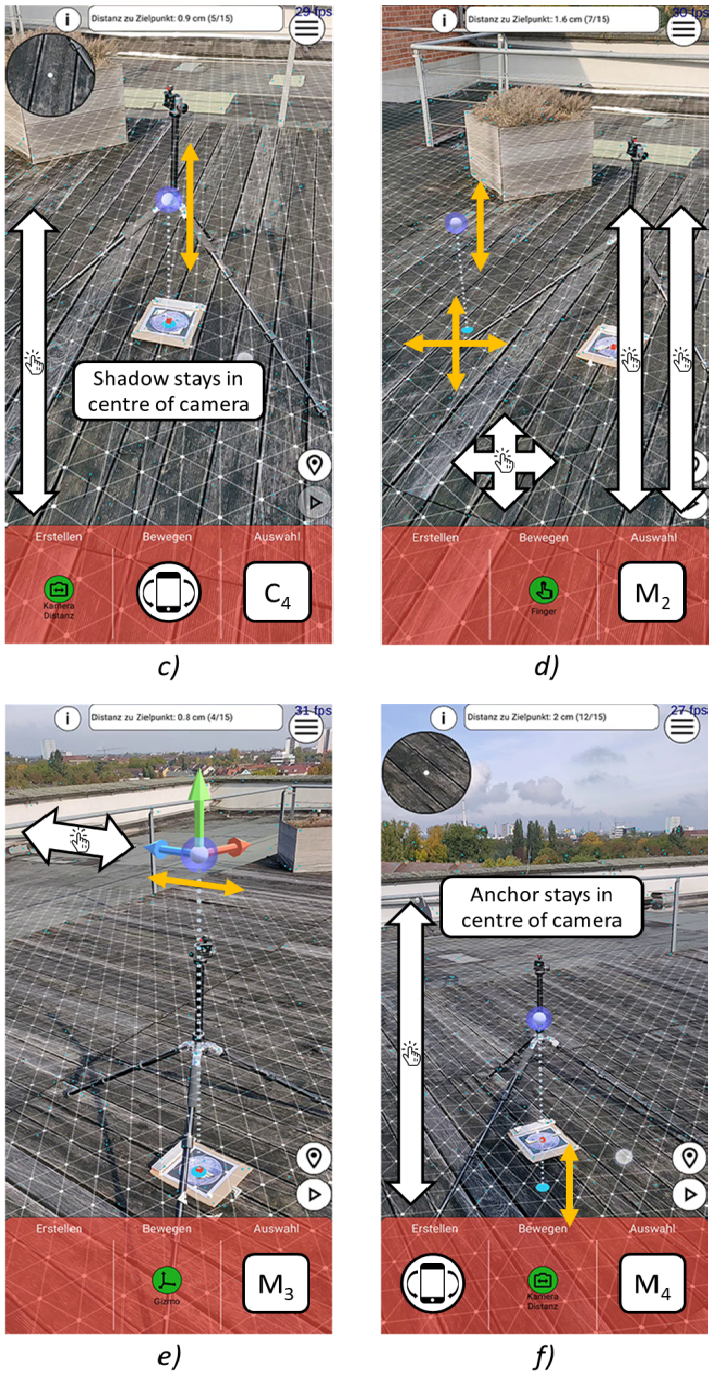

Interaction techniques for 3D-positioning objects in mobile augmented reality

C.-P. Hellmuth, M. Bachinski, J. Müller

ACM ICMI 2021

This paper explores interaction techniques for positioning objects in 3D space during instantiation and movement interactions in mobile augmented reality. We designed the methods for 3D object positioning (no rotation or scaling) based on camera position and orientation, touch interaction, and combinations of these modalities. We consider four interaction techniques for creation (three new techniques and an existing one) and four techniques for moving (two new techniques and two from previous work). We implemented all interaction methods within a smartphone application and used it as a basis for the experimental evaluation. We evaluated the interaction methods in a comparative user study (N=12): the touch-based methods outperform the camera-based techniques in perceived workload and accuracy, and both are comparable regarding task completion time. The multimodal methods performed worse than the methods based on individual modalities in terms of both performance and workload. We discuss the implications of these findings for HCI research and provide corresponding design recommendations. For example, we recommend avoiding the combination of camera- and touch-based methods for simultaneous interaction, as they interfere with each other and introduce jitter and inaccuracies in the user input.

[pdf]
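
Camera-based techniques derive the object position from the device pose. Purely as an illustration, and not as one of the paper's techniques, the sketch below assumes a hypothetical "place at a fixed distance along the view ray" rule, a camera orientation given as a unit quaternion (w, x, y, z), and a viewing direction along the camera's local -Z axis (a common AR convention, assumed here).

```python
import numpy as np

def rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, u = q[0], np.asarray(q[1:], dtype=float)
    v = np.asarray(v, dtype=float)
    t = 2.0 * np.cross(u, v)
    return v + w * t + np.cross(u, t)

def place_in_front_of_camera(cam_pos, cam_rot, distance):
    """Hypothetical camera-based placement: put the object `distance` metres
    along the camera's viewing direction (assumed to be local -Z)."""
    forward = rotate(cam_rot, [0.0, 0.0, -1.0])
    return np.asarray(cam_pos, dtype=float) + distance * forward
```

With such a rule, the object follows every small hand tremor of the phone, which is one intuition for why combining it with simultaneous touch input could introduce jitter.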

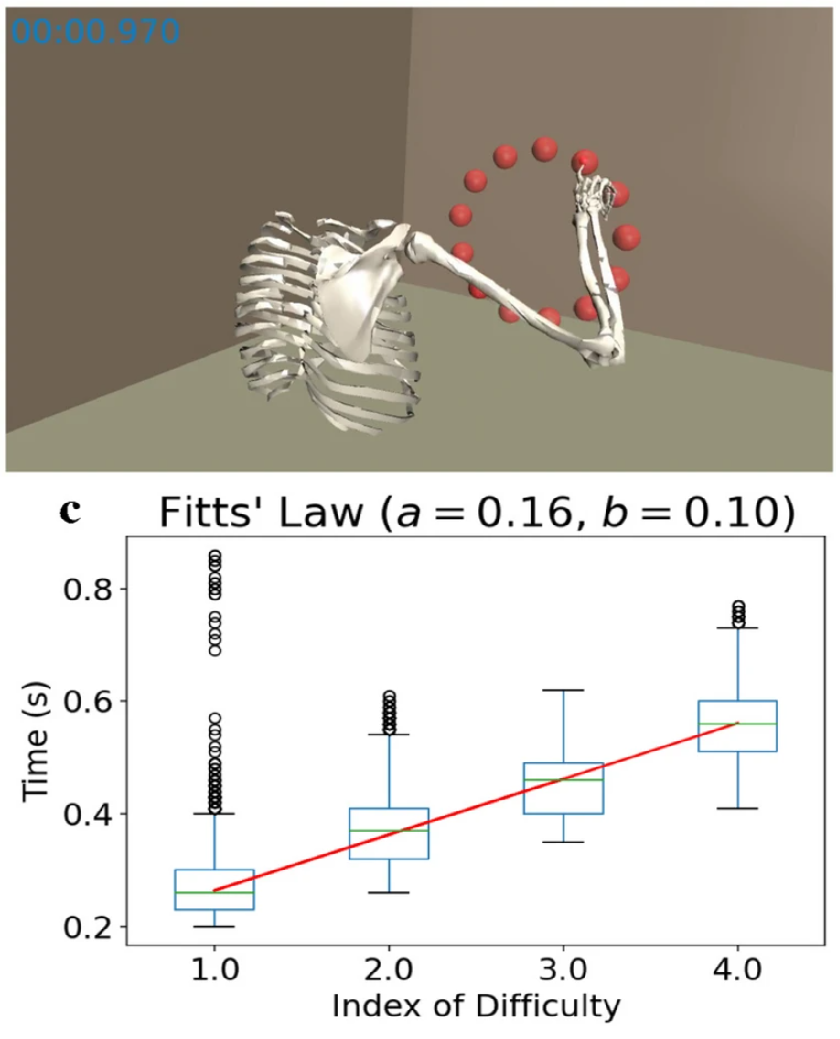

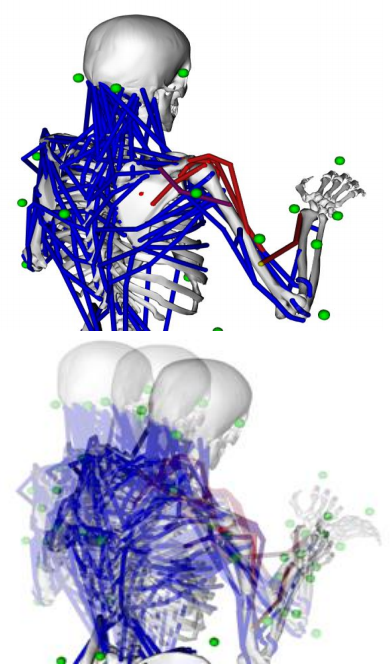

Reinforcement learning control of a biomechanical model of the upper extremity

F. Fischer, M. Bachinski, M. Klar, A. Fleig, J. Müller

Scientific Reports 11.1 (2021): 14445.

Among the infinite number of possible movements that can be produced, humans are commonly assumed to choose those that optimize criteria such as minimizing movement time, subject to certain movement constraints like signal-dependent and constant motor noise. While so far these assumptions have only been evaluated for simplified point-mass or planar models, we address the question of whether they can predict reaching movements in a full skeletal model of the human upper extremity. We learn a control policy using a motor babbling approach as implemented in reinforcement learning, using aimed movements of the tip of the right index finger towards randomly placed 3D targets of varying size. We use a state-of-the-art biomechanical model, which includes seven actuated degrees of freedom. To deal with the curse of dimensionality, we use a simplified second-order muscle model, acting at each degree of freedom instead of individual muscles. The results confirm that the assumptions of signal-dependent and constant motor noise, together with the objective of movement time minimization, are sufficient for a state-of-the-art skeletal model of the human upper extremity to reproduce complex phenomena of human movement, in particular Fitts’ Law and the 2/3 Power Law. This result supports the notion that control of the complex human biomechanical system can plausibly be determined by a set of simple assumptions and can easily be learned.

[pdf]
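
Two ingredients named in the abstract can be illustrated in isolation. The sketch assumes common textbook forms: Gaussian motor noise whose standard deviation grows linearly with the command magnitude plus a constant floor, and the 2/3 Power Law written as tangential speed v = K·κ^(-1/3) (equivalently, angular speed proportional to curvature^(2/3)). The noise scales sdn_scale and const_std and the helper names are hypothetical, not the paper's values or code.

```python
import numpy as np

def noisy_command(u, rng, sdn_scale=0.1, const_std=0.01):
    """Add signal-dependent noise (std proportional to |u|) and constant noise."""
    u = np.asarray(u, dtype=float)
    signal_dependent = sdn_scale * np.abs(u) * rng.standard_normal(u.shape)
    constant = const_std * rng.standard_normal(u.shape)
    return u + signal_dependent + constant

def power_law_gain(x, y, dt):
    """Estimate K(t) = v * kappa**(1/3) along a uniformly sampled 2D path.

    Under the 2/3 power law, v = K * kappa**(-1/3), so K should stay roughly
    constant along the movement.
    """
    dx, dy = np.gradient(x, dt), np.gradient(y, dt)
    ddx, ddy = np.gradient(dx, dt), np.gradient(dy, dt)
    speed = np.hypot(dx, dy)
    curvature = np.abs(dx * ddy - dy * ddx) / speed ** 3
    return speed * np.cbrt(curvature)
```

As a sanity check, an ellipse traced with harmonic motion, x = a·cos(t) and y = b·sin(t), satisfies the law exactly with K = (ab)^(1/3).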

Dynamics of Aimed Mid-air Movements

M. Bachinski, J. Müller

ACM CHI 2020

Mid-air arm movements are ubiquitous in VR, AR, and gestural interfaces. While mouse movements have received some attention, the dynamics of mid-air movements are understudied in HCI. In this paper we present an exploratory analysis of the dynamics of aimed mid-air movements. We explore the 3rd order lag (3OL) and existing 2nd order lag (2OL) models for modeling these dynamics. For a majority of movements the 3OL model captures mid-air dynamics better, in particular acceleration. The models can effectively predict the complete time series of position, velocity and acceleration of aimed movements given an initial state and a target using three (2OL) or four (3OL) free parameters.

[pdf]
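
To make the idea of predicting a full position, velocity and acceleration time series from an initial state and a target concrete, here is a minimal sketch of a generic second-order lag, T²·x'' + 2·D·T·x' + x = target. The time constant T, damping ratio D and function name are illustrative assumptions; the paper's exact 2OL and 3OL parameterizations may differ.

```python
import numpy as np

def simulate_2ol(x0, target, T=0.1, D=1.0, dt=0.001, duration=1.0):
    """Integrate a second-order lag T^2 x'' + 2 D T x' + x = target from rest at x0.

    T (time constant) and D (damping ratio) are illustrative parameters.
    Returns position, velocity and acceleration time series.
    """
    n = int(round(duration / dt))
    pos, vel, acc = np.zeros(n + 1), np.zeros(n + 1), np.zeros(n + 1)
    pos[0] = x0
    for k in range(n):
        acc[k] = (target - pos[k] - 2 * D * T * vel[k]) / T ** 2
        vel[k + 1] = vel[k] + acc[k] * dt       # semi-implicit (symplectic) Euler
        pos[k + 1] = pos[k] + vel[k + 1] * dt
    acc[n] = (target - pos[n] - 2 * D * T * vel[n]) / T ** 2
    return pos, vel, acc
```

In this sketch the acceleration jumps to its peak at movement onset; fitting T and D (plus any gain) to recorded trajectories would be the modeling step.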

Performance and Experience of Throwing in Virtual Reality

T. Zindulka, M. Bachinski, J. Müller

ACM CHI 2020

Throwing is a fundamental movement in many sports and games. Despite this, accurate throwing in today's VR applications is surprisingly difficult. In this paper we explore the nature of the difficulties of throwing in VR in more detail. We present the results of a user study comparing throwing in VR and in the physical world. In a short pre-study with 3 participants, we determine an optimal number of throwing repetitions for the main study by exploring the learning curve and subjective fatigue of throwing in VR. In the main study, with 12 participants, we find that throwing precision and accuracy in VR are lower, particularly in the distance and height dimensions. Throwing in VR also requires more effort and exhibits different kinematic patterns.

[pdf]
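
The abstract distinguishes precision from accuracy and reports them per dimension. One common operationalization, assumed here rather than taken from the paper, treats accuracy as the mean signed error from the target on each axis and precision as the standard deviation of the landing positions. The function name and axis order are hypothetical.

```python
import numpy as np

def accuracy_and_precision(landing, target):
    """Per-axis accuracy (mean signed error) and precision (SD of landing points).

    landing: array of shape (n_throws, 3); target: array of shape (3,).
    Axis order is an assumption, e.g. (lateral, distance, height).
    """
    landing = np.asarray(landing, dtype=float)
    errors = landing - np.asarray(target, dtype=float)
    accuracy = errors.mean(axis=0)           # systematic bias per axis
    precision = landing.std(axis=0, ddof=1)  # spread per axis (lower = more precise)
    return accuracy, precision
```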

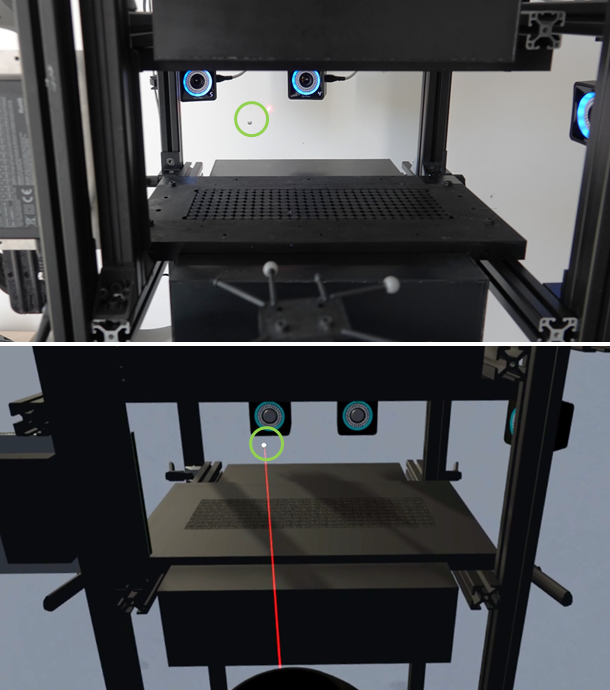

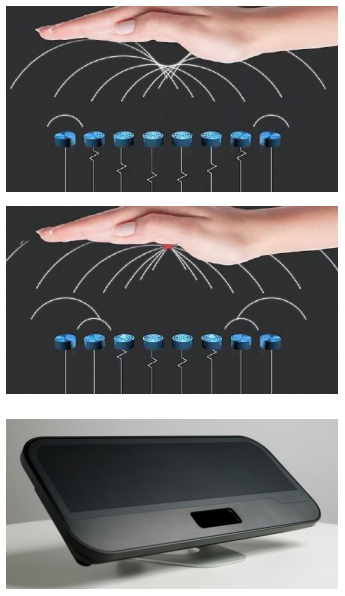

Levitation Simulator: Prototyping Ultrasonic Levitation Interfaces in Virtual Reality

V. Paneva, M. Bachinski, J. Müller

ACM CHI 2020

We present the Levitation Simulator, a system that enables researchers and designers to iteratively develop and prototype levitation interface ideas in Virtual Reality. This includes user tests and formal experiments. We derive a model of the movement of a levitating particle in such an interface. Based on this, we develop an interactive simulation of the levitation interface in VR, which exhibits the dynamical properties of the real interface. The results of a Fitts’ Law pointing study show that the Levitation Simulator enables performance comparable to the real prototype. We developed the first two interactive games dedicated to levitation interfaces, LeviShooter and BeadBounce, in the Levitation Simulator, and then implemented them on the real interface. Our results indicate that participants experienced similar levels of user engagement when playing the games in the two environments. We share our Levitation Simulator as Open Source, thereby democratizing levitation research without the need for a levitation apparatus.

[pdf]
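
Fitts' Law pointing studies typically model movement time as MT = a + b·ID. A minimal sketch, assuming the Shannon formulation ID = log2(D/W + 1) with nominal target widths (many studies use the effective width instead) and an ordinary least-squares fit; the helper names are illustrative.

```python
import numpy as np

def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return np.log2(np.asarray(distance, dtype=float) / np.asarray(width, dtype=float) + 1.0)

def fit_fitts(distance, width, movement_time):
    """Least-squares fit of MT = a + b * ID; returns (a, b)."""
    ids = index_of_difficulty(distance, width)
    b, a = np.polyfit(ids, np.asarray(movement_time, dtype=float), 1)
    return a, b
```

Comparing the fitted intercept and slope between two conditions, such as a simulator and a physical prototype, is one way to quantify "comparable performance".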

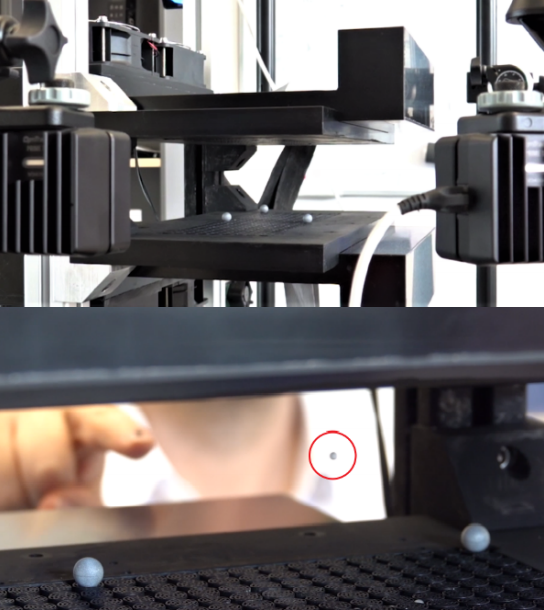

LeviCursor: Dexterous Interaction with a Levitating Object

M. Bachinski, V. Paneva, J. Müller

ACM ISS 2018

We present LeviCursor, a method for interactively moving a physical, levitating particle in 3D with high agility. The levitating object can move continuously and smoothly in any direction. We optimize the transducer phases for each possible levitation point independently. Using precomputation, our system can determine the optimal transducer phases within a few microseconds and achieves round-trip latencies of 15 ms. Due to our interpolation scheme, the levitated object can be controlled almost instantaneously with sub-millimeter accuracy. We present a particle stabilization mechanism which ensures the levitating particle is always in the main levitation trap. Lastly, we conduct the first Fitts' law-type pointing study with a real 3D cursor, where participants control the movement of the levitated cursor between two physical targets. The results of the user study demonstrate that using LeviCursor, users reach performance comparable to that of a mouse pointer.

[pdf]
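
The abstract does not detail the interpolation scheme, so the following is only a hypothetical sketch of one pitfall that any interpolation of precomputed transducer phases has to handle: phases wrap around at 2π, so blending raw angles can be badly wrong. Interpolating unit phasors avoids this. The function name and the linear blend between two grid points are assumptions.

```python
import numpy as np

def interpolate_phases(phases_a, phases_b, t):
    """Blend precomputed transducer phases (radians) between two grid points.

    Interpolates unit phasors exp(i*phase) instead of raw angles so that, e.g.,
    350 deg and 10 deg blend to 0 deg rather than 180 deg. If the two phasors
    are nearly opposite, the blended phase is ill-defined.
    """
    za = np.exp(1j * np.asarray(phases_a, dtype=float))
    zb = np.exp(1j * np.asarray(phases_b, dtype=float))
    blended = (1.0 - t) * za + t * zb
    return np.mod(np.angle(blended), 2.0 * np.pi)
```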

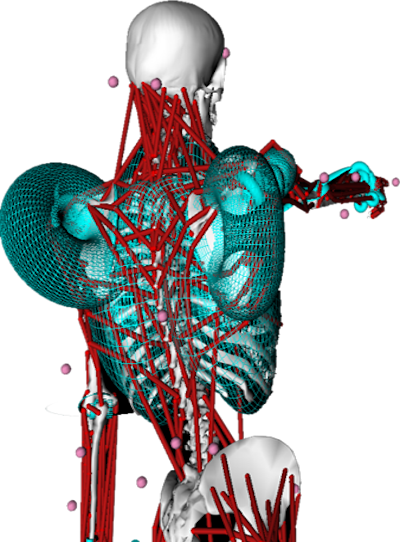

Introducing postural variability improves the distribution of muscular loads during mid-air gestural interaction

F. Nunnari, M. Bachinski, A. Heloir

ACM MIG '16: Proceedings of the 9th International Conference on Motion in Games

Only time will tell if motion-controlled systems are the future of gaming and other industries and if mid-air gestural input will eventually offer a more intuitive way to play games and interact with computers. Whatever the eventual outcome, it is necessary to assess the ergonomics of mid-air input metaphors and propose design guidelines which will guarantee their safe use in the long run. This paper presents an ergonomic study showing how to mitigate the muscular strain induced by prolonged mid-air gesture interaction by encouraging postural shifts during the interaction. A quantitative and qualitative user study involving 30 subjects validates the setup. The simulated musculo-skeletal load values support our hypothesis and show a statistically significant 19% decrease in average muscle loads on the shoulder, neck, and back area in the modified condition compared to the baseline.

[pdf]

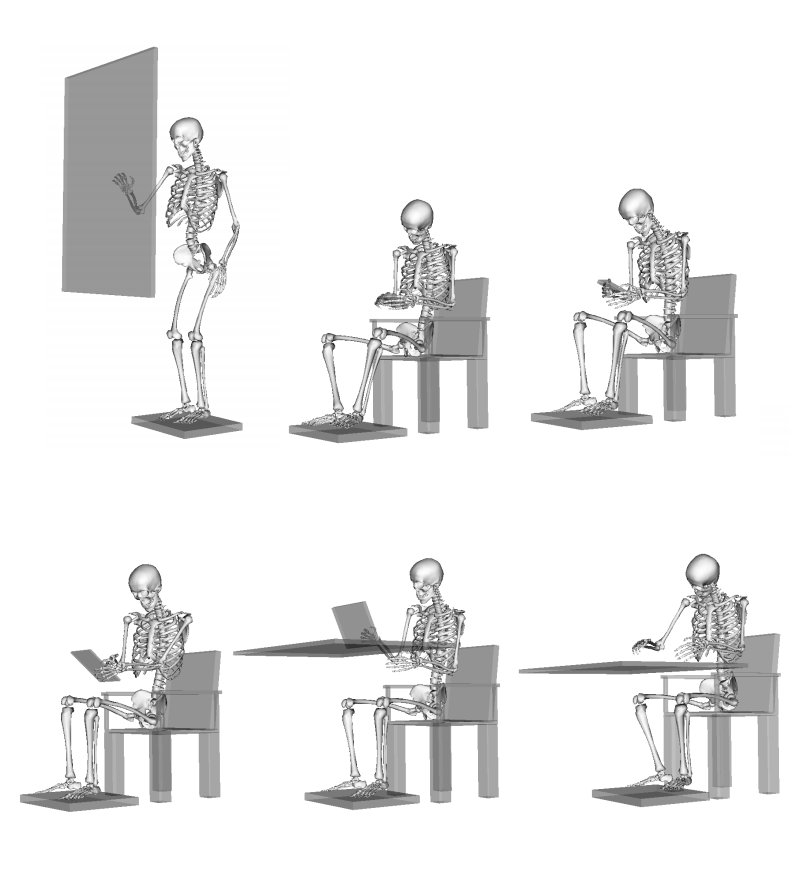

Performance and Ergonomics of Touch Surfaces: A Comparative Study using Biomechanical Simulation

M. Bachynskyi, G. Palmas, A. Oulasvirta, J. Steimle, T. Weinkauf

ACM CHI 2015

Although different types of touch surfaces have gained extensive attention in HCI, this is the first work to directly compare them for two critical factors: performance and ergonomics. Our data come from a pointing task (N=40) carried out on five common touch surface types: public display (large, vertical, standing), tabletop (large, horizontal, seated), laptop (medium, adjustably tilted, seated), tablet (seated, in hand), and smartphone (single- and two-handed input). Ergonomics indices were calculated from biomechanical simulations of motion capture data combined with recordings of external forces. We provide an extensive dataset for researchers and report the first analyses of similarities and differences that are attributable to the different postures and movement ranges.

[pdf]

Informing the Design of Novel Input Methods with Muscle Coactivation Clustering

M. Bachynskyi, G. Palmas, A. Oulasvirta, T. Weinkauf

ACM ToCHI, Volume: 21, Issue: 6, 2015, pp. 1-25

This article presents a novel summarization of biomechanical and performance data for user interface designers. Previously, there was no simple way for designers to predict how the location, direction, velocity, precision, or amplitude of users’ movement affects performance and fatigue. We cluster muscle coactivation data from a 3D pointing task covering the whole reachable space of the arm. We identify 11 clusters of pointing movements with distinct muscular, spatio-temporal, and performance properties. We discuss their use as heuristics when designing for 3D pointing.

[pdf]
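
The clustering algorithm is not specified in this summary, so as a hypothetical illustration the sketch below clusters muscle coactivation vectors with plain k-means, using deterministic farthest-point initialization for repeatable results. The function name and data layout are assumptions. The article reports 11 clusters, which would correspond to k=11 in this setting.

```python
import numpy as np

def cluster_coactivations(data, k, iters=100):
    """k-means clustering of muscle coactivation vectors.

    data: array (n_movements, n_muscles), e.g. mean normalised activation per
    muscle for each pointing movement (assumed layout).
    """
    data = np.asarray(data, dtype=float)
    centers = [data[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        dist = np.min([np.linalg.norm(data - c, axis=1) for c in centers], axis=0)
        centers.append(data[np.argmax(dist)])
    centers = np.array(centers)

    def assign(cs):
        d = np.linalg.norm(data[:, None, :] - cs[None, :, :], axis=2)
        return d.argmin(axis=1)

    for _ in range(iters):
        labels = assign(centers)
        new_centers = np.array([
            data[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return assign(centers), centers
```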

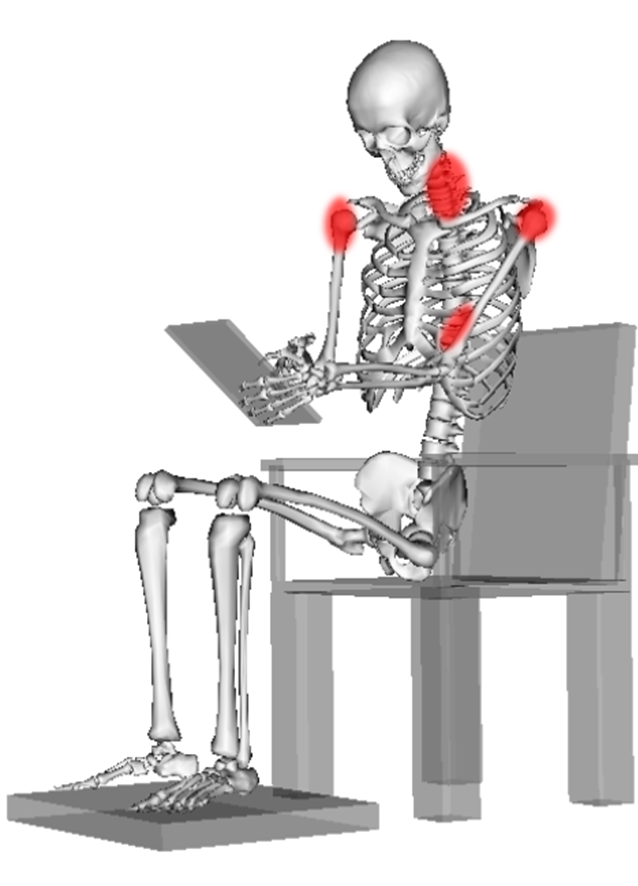

Physical ergonomics of tablet interaction while sitting

M. Bachynskyi

39th Annual Meeting of the American Society of Biomechanics

We present a physical ergonomics assessment of typical tablet device usage. Tablet devices are becoming widespread and often even displace personal computers and laptops. However, while the physical ergonomics of PCs and laptops has been studied extensively, little is known about the ergonomics of tablet devices. In particular, the assessment is complex because the portability of tablets allows users to adopt a variety of tablet locations, orientations, and holds. The purpose of this work was to identify typical postures, the set of recruited muscles, and the health risks related to tablet interaction.

[pdf]

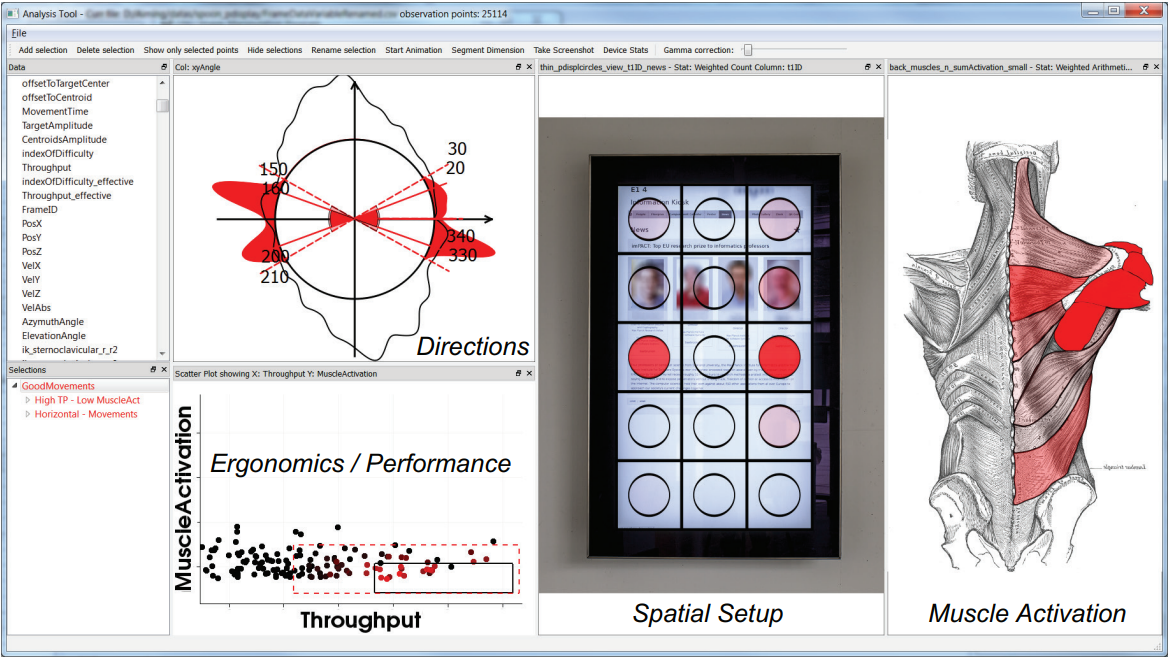

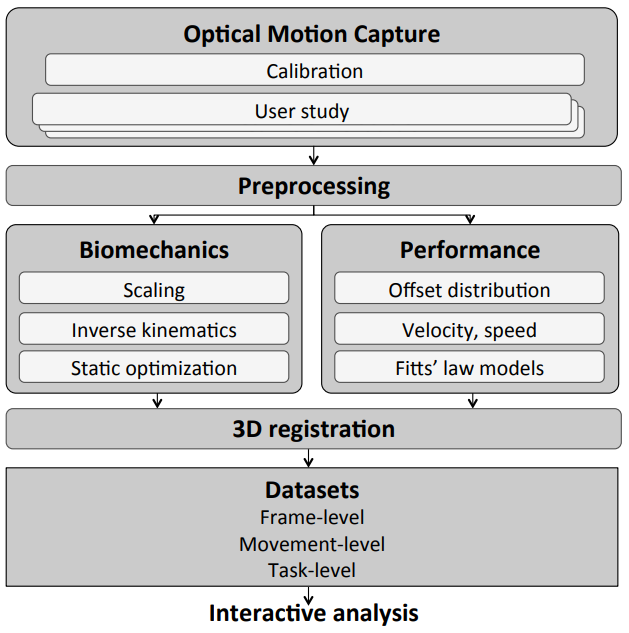

MovExp: A Versatile Visualization Tool for Human-Computer Interaction Studies with 3D Performance and Biomechanical Data

G. Palmas, M. Bachynskyi, A. Oulasvirta, H.-P. Seidel, T. Weinkauf

IEEE Transactions on Visualization and Computer Graphics, Volume: 20, Issue: 12, Dec. 31 2014

In Human-Computer Interaction (HCI), experts seek to evaluate and compare the performance and ergonomics of user interfaces. Recently, a novel cost-efficient method for estimating physical ergonomics and performance has been introduced to HCI. It is based on optical motion capture and biomechanical simulation. It provides a rich source for analyzing human movements summarized in a multidimensional data set. Existing visualization tools do not sufficiently support the HCI experts in analyzing this data. We identified two shortcomings. First, appropriate visual encodings are missing particularly for the biomechanical aspects of the data. Second, the physical setup of the user interface cannot be incorporated explicitly into existing tools. We present MovExp, a versatile visualization tool that supports the evaluation of user interfaces. In particular, it can be easily adapted by the HCI experts to include the physical setup that is being evaluated, and visualize the data on top of it. Furthermore, it provides a variety of visual encodings to communicate muscular loads, movement directions, and other specifics of HCI studies that employ motion capture and biomechanical simulation. In this design study, we follow a problem-driven research approach. Based on a formalization of the visualization needs and the data structure, we formulate technical requirements for the visualization tool and present novel solutions to the analysis needs of the HCI experts. We show the utility of our tool with four case studies from the daily work of our HCI experts.

[pdf]

Is motion capture-based biomechanical simulation valid for HCI studies?: study and implications

M. Bachynskyi, A. Oulasvirta, G. Palmas, T. Weinkauf

ACM CHI 2014

Motion-capture-based biomechanical simulation is a non-invasive analysis method that yields a rich description of posture, joint, and muscle activity in human movement. The method is presently gaining ground in sports, medicine, and industrial ergonomics, but it also has great potential for HCI studies in which the physical ergonomics of a design is important. To make the method more broadly accessible, we study its predictive validity for movements and users typical of HCI studies. We discuss the sources of error in biomechanical simulation and present results from two validation studies conducted with a state-of-the-art system. Study I tested aimed movements ranging from multitouch gestures to dancing and found that the critical limiting factor is the size of the movement. Study II compared muscle activation predictions to surface-EMG recordings in a 3D pointing task. The data shows medium-to-high validity that is, however, constrained by some characteristics of the movement and the user. We draw concrete recommendations for practitioners and discuss challenges in developing the method further.

[pdf]
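
Study II's comparison of predicted muscle activations with surface-EMG recordings can be illustrated with a minimal sketch. It assumes both signals are already time-aligned and resampled to the same rate, and that the EMG has been rectified and low-pass filtered into an envelope. Pearson's r and RMSE are our choice of agreement measures for illustration, not necessarily the measures used in the paper, and all function names and example values are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(predicted: Sequence[float], measured: Sequence[float]) -> float:
    """Pearson correlation between two time-aligned signals of equal length."""
    if len(predicted) != len(measured) or len(predicted) < 2:
        raise ValueError("signals must be time-aligned with at least 2 samples")
    mean_p = sum(predicted) / len(predicted)
    mean_m = sum(measured) / len(measured)
    cov = sum((p - mean_p) * (m - mean_m) for p, m in zip(predicted, measured))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_m = sum((m - mean_m) ** 2 for m in measured)
    # Raises ZeroDivisionError if either signal is constant.
    return cov / math.sqrt(var_p * var_m)


def normalize_to_peak(envelope: Sequence[float]) -> list[float]:
    """Scale an EMG envelope to [0, 1] by its peak value (a simplifying assumption)."""
    peak = max(envelope)
    if peak <= 0:
        raise ValueError("envelope must contain a positive peak")
    return [x / peak for x in envelope]


def rmse(predicted: Sequence[float], measured: Sequence[float]) -> float:
    """Root-mean-square difference between two time-aligned signals."""
    return math.sqrt(
        sum((p - m) ** 2 for p, m in zip(predicted, measured)) / len(predicted)
    )


# Hypothetical example values, not data from the study.
predicted_activation = [0.10, 0.25, 0.40, 0.30, 0.15]  # simulated activation, 0..1
emg_envelope = [12.0, 30.0, 46.0, 33.0, 20.0]           # rectified, filtered sEMG
emg_norm = normalize_to_peak(emg_envelope)
print(f"r = {pearson_r(predicted_activation, emg_norm):.2f}")
print(f"RMSE = {rmse(predicted_activation, emg_norm):.2f}")
```

Because Pearson's r is scale-invariant, the peak normalization only affects the RMSE; normalizing to a maximum voluntary contraction instead of the trial peak is an alternative the sketch leaves open.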

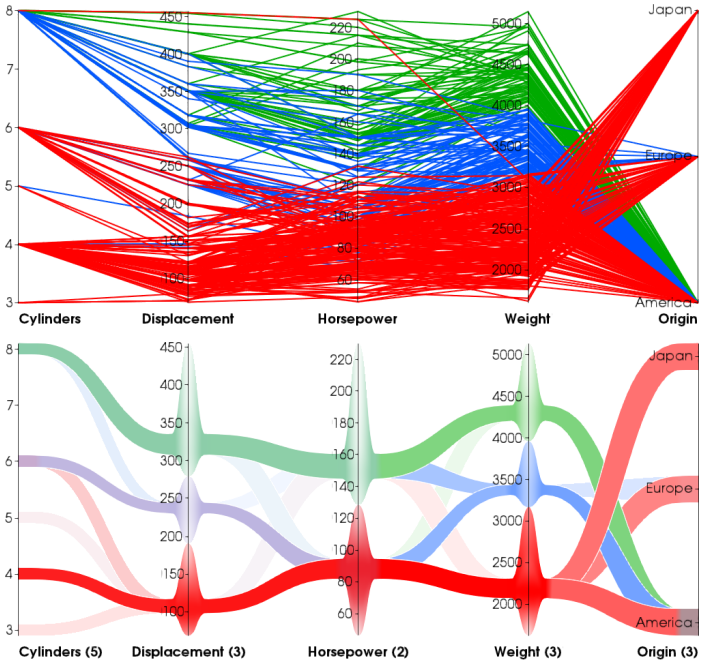

An edge-bundling layout for interactive parallel coordinates

G. Palmas, M. Bachynskyi, A. Oulasvirta, H.-P. Seidel, T. Weinkauf

IEEE Pacific Visualization Symposium 2014

Parallel Coordinates is an often-used visualization method for multidimensional data sets. Its main challenges for large data sets are visual clutter and overplotting, which hamper the recognition of patterns in the data. We present an edge-bundling method using density-based clustering for each dimension. This reduces clutter and provides a faster overview of clusters and trends. Moreover, it allows the clustered lines to be rendered as polygons, decreasing rendering time considerably. In addition, we design interactions to support multidimensional clustering with this method. A user study shows improvements over the classic parallel coordinates plot in two user tasks: correlation estimation and subset tracing.

[pdf]
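
The idea of bundling lines through per-dimension density-based clustering can be sketched as follows. This is a minimal illustration under our own assumptions: values are normalized to [0, 1], each axis is clustered by a Gaussian kernel density estimate whose local minima act as cluster boundaries, and lines between adjacent axes are grouped by their (left cluster, right cluster) pair. The paper's exact clustering, polygon geometry, and interactions are not reproduced here, and all function names are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence


def kde(values: Sequence[float], grid: Sequence[float], bandwidth: float) -> list[float]:
    """Gaussian kernel density estimate of `values`, evaluated at each grid point."""
    norm = 1.0 / (len(values) * bandwidth * math.sqrt(2 * math.pi))
    return [
        norm * sum(math.exp(-0.5 * ((g - v) / bandwidth) ** 2) for v in values)
        for g in grid
    ]


def cluster_axis(values: Sequence[float], bandwidth: float = 0.05,
                 grid_size: int = 200) -> list[int]:
    """Assign each value on one axis to a density cluster.

    Assumes values are normalized to [0, 1]. Cluster boundaries are placed at
    local minima of the 1D density estimate.
    """
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    density = kde(values, grid, bandwidth)
    cuts = [
        grid[i]
        for i in range(1, grid_size - 1)
        if density[i] < density[i - 1] and density[i] <= density[i + 1]
    ]
    # A value's cluster label is the number of boundaries below it.
    return [sum(1 for c in cuts if v > c) for v in values]


def bundle(rows: Sequence[Sequence[float]], bandwidth: float = 0.05) -> list[Counter]:
    """For each pair of adjacent axes, count lines per (left cluster, right cluster).

    Each key of a Counter is one bundle that could be drawn as a single
    polygon instead of as many individual polylines.
    """
    n_dims = len(rows[0])
    labels = [cluster_axis([row[d] for row in rows], bandwidth) for d in range(n_dims)]
    return [Counter(zip(labels[d], labels[d + 1])) for d in range(n_dims - 1)]


# Hypothetical 2D data: two groups whose vertical order flips between the axes.
rows = [(0.08, 0.90), (0.10, 0.88), (0.12, 0.92),
        (0.88, 0.10), (0.90, 0.12), (0.92, 0.08)]
print(bundle(rows))
```

Drawing one shape per bundle rather than one line per data item is what makes rendering cost depend on the number of cluster pairs instead of the number of records.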

PhD thesis

Biomechanical models for human-computer interaction

Universität des Saarlandes 2018

Post-desktop user interfaces, such as smartphones, tablets, interactive tabletops, public displays, and mid-air interfaces, are already a ubiquitous part of everyday human life, or have the potential to become so. A key feature of these interfaces is that the device form factor imposes fewer, or even no, constraints on input movements. This freedom is advantageous for users, allowing them to interact with computers using more natural limb movements. However, it creates four issues for the research and design of post-desktop interfaces that make traditional analysis methods inefficient: the new movement space is orders of magnitude larger than the one analyzed for traditional desktops; existing knowledge of post-desktop input methods is sparse and sporadic; the movement space is non-uniform with respect to performance; and traditional methods are ineffective or inefficient at tackling physical ergonomics pitfalls in post-desktop interfaces. These issues raise the research problem of finding efficient assessment, analysis, and design methods for high-throughput, ergonomic post-desktop interfaces. To solve this problem and support researchers and designers, this thesis proposes efficient experiment- and model-based assessment methods for post-desktop user interfaces. We achieve this through the following contributions:

- adopting optical motion capture and biomechanical simulation for HCI experiments as a versatile source of both performance and ergonomics data describing an input method;
- identifying the applicability limits of the method for a range of HCI tasks;
- validating the method's outputs against ground-truth recordings in a typical HCI setting;
- demonstrating the added value of the method in analyzing the performance and ergonomics of touchscreen devices; and
- summarizing the performance and ergonomics of a movement space through clustering of physiological data.

The proposed method successfully addresses the four issues of post-desktop input described above. The efficiency of the methods makes it possible to tackle the issue of large post-desktop movement spaces both at early design stages (through a generic model of a movement space) and at later design stages (through user studies). The method provides rich data on physical ergonomics (joint angles and moments, muscle forces and activations, energy expenditure, and fatigue), making it possible to address the issue of ergonomics pitfalls. Additionally, the method provides performance data (speed, accuracy, and throughput) that can be related to the physiological data to address the non-uniformity of the movement space. In our adaptation, the method does not require experimenters to have specialized expertise, which makes it accessible to a wide range of researchers and designers and contributes towards solving the sparsity of post-desktop knowledge.

[pdf]
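
The thesis abstract lists throughput among the performance data but does not give a formula. One widely used definition in HCI pointing studies is Fitts'-law effective throughput (as in ISO 9241-9): the effective width W_e = 4.133 × SD of the selection endpoints, the effective index of difficulty ID_e = log2(D / W_e + 1), and TP = ID_e / MT. The minimal sketch below assumes that definition and uses the nominal distance D for simplicity; whether the thesis computes throughput exactly this way is not stated here, and the function name and example values are hypothetical.

```python
import math
import statistics
from typing import Sequence


def effective_throughput(
    distance: float,
    endpoint_deviations: Sequence[float],
    movement_times_s: Sequence[float],
) -> float:
    """Effective throughput in bits/s for one distance-width condition.

    distance: nominal movement amplitude D (e.g. in mm).
    endpoint_deviations: signed endpoint offsets along the movement axis
        (same unit as D); at least two trials are required.
    movement_times_s: per-trial movement times in seconds.
    """
    # Effective width: 4.133 x the sample standard deviation of the endpoints.
    w_e = 4.133 * statistics.stdev(endpoint_deviations)
    # Effective index of difficulty (Shannon formulation), in bits.
    id_e = math.log2(distance / w_e + 1)
    # Throughput: bits divided by mean movement time.
    return id_e / statistics.mean(movement_times_s)


# Hypothetical trials for a single condition, not data from the thesis.
tp = effective_throughput(
    160.0,
    [-3.1, 1.2, 4.0, -0.8, 2.5, -1.9],
    [0.62, 0.71, 0.66, 0.58, 0.69, 0.64],
)
print(f"TP_e = {tp:.2f} bits/s")
```

Per-condition values like this one are usually averaged across conditions to obtain a single throughput per participant.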

Book chapters, workshops, and other publications

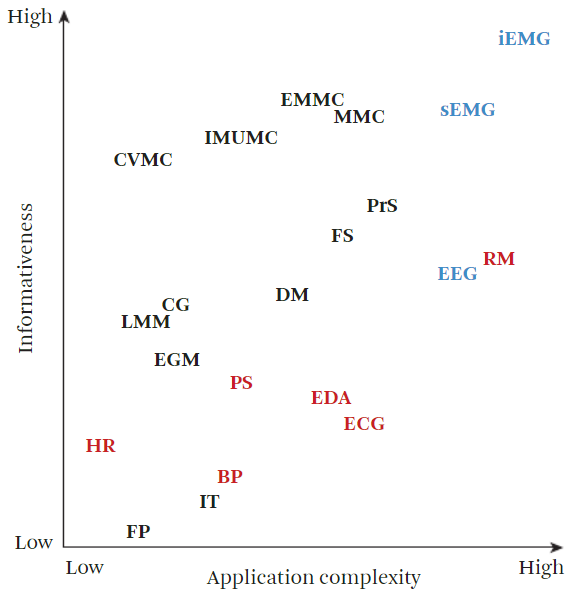

Ergonomics for the design of multimodal interfaces

A. Heloir, F. Nunnari, M. Bachynskyi

The Handbook of Multimodal-Multisensor Interfaces: Language Processing, Software, Commercialization, and Emerging Directions. Volume 3, July 2019, pp. 263–304

This chapter presents an overview of ergonomic studies in multimodal interfaces, starting with the main challenges posed by post-desktop interfaces and introducing the importance of ergonomic studies. Then, physical ergonomics is introduced. Next, the focus is placed on motion-capture-based biomechanical simulation as a universal method for assessing multimodal interaction design. Finally, open questions and prospects are discussed.

[pdf]

Mid-Air Haptic Interfaces for Interactive Digital Signage and Kiosks

O. Georgiou, H. Limerick, L. Corenthy, M. Perry, M. Maksymenko, S. Frish, J. Müller, M. Bachynskyi, J. R. Kim

Extended Abstracts of CHI 2019

Digital signage systems are transitioning from static displays to rich, dynamic interactive experiences, while the enabling technologies that support these interactions are also evolving. For instance, advances in computer vision and in face, gaze, facial expression, body, and hand-gesture recognition have enabled new ways of interacting with digital content at a distance. Advertisers and retailers are only just adopting such possibilities, yet they face important challenges, e.g., the lack of a commonly accepted set of gestures, facial expressions, or calls to action. Another common issue is the absence of active tactile stimuli. Mid-air haptic interfaces can help alleviate these problems by aiding in defining a gesture set, informing users about their interaction via haptic feedback loops, and enhancing the overall user experience. This workshop aims to examine the possibilities opened up by these technologies and discuss opportunities for designing the next generation of interactive digital signage kiosks.

[pdf]
